Generation

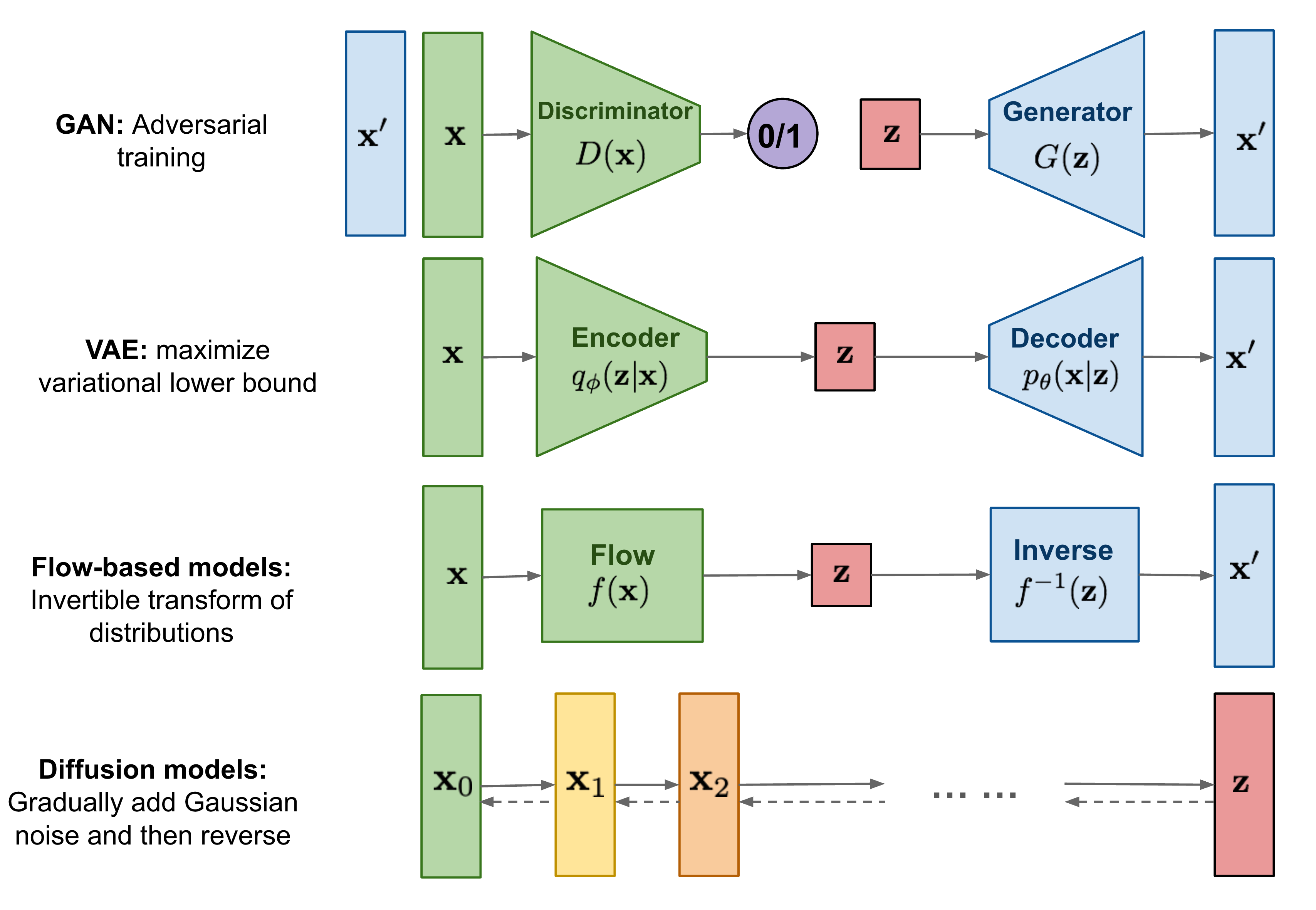

Generative Adversarial Network (GAN)

GAN models are known for potentially unstable training and for lower diversity in their generated samples, both of which stem from their adversarial training.
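For reference, the adversarial training mentioned above is usually written as a two-player minimax game. The symbols here are assumptions that do not appear elsewhere in these notes: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the data distribution, and $p_z$ the prior over the latent code.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Because $G$ and $D$ are optimized against each other rather than toward a single fixed loss, training can oscillate instead of converging, which is one way to read the instability noted above.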

VAE

A VAE relies on a surrogate loss: instead of the intractable log-likelihood, it optimizes the evidence lower bound (ELBO).

- How to understand variational inference in a simple, accessible way?

- Inference Suboptimality in Variational Autoencoders

- The Reparameterization Trick

- Paper List
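As a rough illustration of the reparameterization trick listed above, the sketch below samples a latent code as $z = \mu + \sigma \epsilon$ with $\epsilon \sim \mathcal N(0, I)$, and computes the closed-form KL term that appears in the surrogate loss for a diagonal Gaussian posterior and a standard normal prior. The function names and the values of `mu` and `log_var` are hypothetical stand-ins for encoder outputs, not taken from any of the linked references.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, diag(exp(log_var))) as z = mu + sigma * eps, eps ~ N(0, I).

    The randomness lives in eps only, so z is a deterministic,
    differentiable function of mu and log_var.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]  # hypothetical encoder outputs
z = reparameterize(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
```

In a full model, the negative ELBO would be a reconstruction term evaluated at `z` plus this KL term; the reconstruction part is omitted here because it depends on the decoder.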

Flow-based Model

Flow models must use specialized architectures to construct reversible transforms.

Normalizing flows are a class of generative models that map a complex probability distribution to a simple one, such as a Gaussian, through an invertible transformation.
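A minimal sketch of the idea, assuming a one-dimensional affine flow (the simplest invertible map, chosen here only for illustration): the data density follows from the change-of-variables formula $\log p_X(x) = \log p_Z(f(x)) + \log \left| \frac{\mathrm d f}{\mathrm d x} \right|$, where $f$ maps data to the Gaussian base variable. The `shift` and `scale` parameters are hypothetical.

```python
import math


def standard_normal_logpdf(z):
    """Log-density of the base distribution N(0, 1)."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))


def affine_flow_forward(x, shift, scale):
    """Map data x to the base variable z; also return log|dz/dx|."""
    z = (x - shift) / scale
    log_det = -math.log(abs(scale))
    return z, log_det


def affine_flow_inverse(z, shift, scale):
    """Map a base sample z back to data space (used for generation)."""
    return z * scale + shift


def log_prob(x, shift, scale):
    """Change of variables: log p_X(x) = log p_Z(f(x)) + log|df/dx|."""
    z, log_det = affine_flow_forward(x, shift, scale)
    return standard_normal_logpdf(z) + log_det
```

With this particular flow, `log_prob(x, shift, scale)` matches the log-density of a Gaussian with mean `shift` and standard deviation `abs(scale)`. Practical flows stack many such invertible layers with tractable Jacobian determinants.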

Diffusion Model

Reference

Conditional Generation

Consider learning a conditional mapping function that generates an output $y \in \mathcal Y$ from an input $x$. Our goal is to learn a multi-modal mapping such that an input $x$ can be mapped to multiple, diverse outputs in $\mathcal Y$ depending on the latent factors encoded in $\mathbf z \in \mathcal Z$.

Such a mapping often suffers from the mode-collapse problem: many different latent codes $\mathbf z$ yield nearly the same output.
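The toy sketch below contrasts a mapping that uses the latent code with one that ignores it, measuring diversity as the mean pairwise distance between outputs generated for the same input. Both generators are hypothetical hand-written functions rather than trained models, and the diversity measure is just one simple choice made for illustration.

```python
import math
import random


def diverse_generator(x, z):
    """Hypothetical G(x, z): the output depends on the input and the latent code."""
    return [x + zi for zi in z]


def collapsed_generator(x, z):
    """Hypothetical collapsed G(x, z): the latent code is ignored."""
    return [x for _ in z]


def mean_pairwise_distance(outputs):
    """Average Euclidean distance over all pairs of outputs."""
    dists = [math.dist(a, b)
             for i, a in enumerate(outputs)
             for b in outputs[i + 1:]]
    return sum(dists) / len(dists)


rng = random.Random(0)
latents = [[rng.gauss(0.0, 1.0) for _ in range(2)] for _ in range(8)]
x = 1.5  # a single fixed input

diverse = mean_pairwise_distance([diverse_generator(x, z) for z in latents])
collapsed = mean_pairwise_distance([collapsed_generator(x, z) for z in latents])
```

Here `collapsed` is exactly zero because every latent code produces the same output, while `diverse` is positive. A score near zero for a trained model would be a sign of the collapse described above.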